I have skipped a lot of T-SQL Tuesdays, because either I did not have the time to write anything, or I felt I had nothing useful to write. That changes with edition #162, hosted by Tomaz Kastrun (b|t). He invites us to talk about data science in the time of ChatGPT.

I have skipped a lot of T-SQL Tuesdays, because either I did not have the time to write anything, or I felt I had nothing useful to write. That changes with edition #162, hosted by Tomaz Kastrun (b|t). He invites us to talk about data science in the time of ChatGPT.

To be fair, I am not going to cover that literal topic. But rather, I used his invitation as an excuse to do something that I have been wanting to do, and yet postponing, for a few months already. And that is to try to assess whether ChatGPT can be a valuable assistant when working with execution plans in SQL Server.

The dangers of trust

Several people that I have spoken to about ChatGPT seem to have an unbridled confidence in its answers. I personally believe that to be the biggest danger of this innovative chatbot. People use it to ask questions they don’t know the answer to, and the answer that ChatGPT gives them sounds credible enough that they take it for truth. Despite the disclaimer at the bottom of the screen:

“Free Research Preview. ChatGPT may produce inaccurate information about people, places, or facts. ChatGPT May 3 Version”

Sadly this disclaimer uses a very small font size. I believe it should be in huge bold flashing red letters on the top of the page, and people should only be allowed to use it after typing an explicit acknowledgement of the part where it warns about potentially producing inaccurate information.

The issue with Artificial Intelligence

I am not an AI expert. But I do try to understand at least the very rough basics of how AI works. And one thing that I have gleaned from that, is that we have arrived at the stage where nobody really understands why an AI algorithm gives a specific answer.

Sure, they can explain about the various models used. They can explain all about using known data to train the model, and then using more known data to verify the results of that training. But the models used have become so complex, and the amount of training data is now so huge, that given a specific concrete input, I doubt not a single AI expert could predict the exact answer, or explain the exact reason why a specific answer was generated.

When you ask me a question and the answer sounds suspect to you, you will ask me what I based my answer on. And if I cannot explain that, you will probably not believe my answer. And yet, the world now has a chatbot that pretends it can answer any question you ask it, but that can explain none of them.

For me, this is a good reason to be wary. Very wary.

Put it to the test

My suspicion was increased even more when my good friend Grant Fritchey (b|t) tested how much ChatGPT knows about Query Store, and received incorrect answers. I decided to do a similar experiment, but now (of course) for my own primary area of interest: execution plans and query tuning.

The tests … and the results

I made an account on the OpenAI website, so that I could test ChatGPT. And that was, at first, about all I could do. Whatever I asked ChatGPT, it sat silent for a long while and then I got an error message saying that it could not generate an answer, because the load on the system was too high. Well, so much for being a reliable assistant! Even if the tests turn out to show that ChatGPT gives only reliable and useful information (hint it doesn’t), even then it is still an unreliable assistant, if it only gives answers when not too many people are using it.

But a few days later, when I tried again, I did get answers. And oh boy, they confirmed all my fears. Not by being totally wrong … totally wrong would be okay, that would be clear. ChatGPT is like the boss I had a few jobs back, the one that knew exactly enough T-SQL to be extremely dangerous!

Theoretic questions

I first tested the waters by asking ChatGPT a few theoretic questions about specific execution plan operators. I was not looking for extensive and 100% complete answers. That would not be the expectation in normal human conversation, and ChatGPT is expected to mimic human conversation. But what I did hope to get was an answer that was correct and easy to understand.

Nested Loops

So my first question was about the Nested Loops operator:

So my first question was about the Nested Loops operator:

“What are the benefits and the downsides of a Nested Loops operator in a SQL Server execution plan?”

I have included a screenshot of the full answer to the right. (Click in it to enlarge it). But here, I want to highlight a few of the things that I notice in this answer.

First, a lot of the information in this answer is correct. The answer as a whole is nearly correct. But there are a few glaring mistakes, that I notice because I asked a question that I already know the answer to. If I had not known the answer, if I had genuinely been trying to use ChatGPT to increase my knowledge and understanding, then this answer sounds credible enough to make me believe the information given. Including the incorrect parts.

Second, the answer lacks some clarity. It refers to the two inputs of the Nested Loops operator by their official names: outer input and inner input. But for a beginner, someone most likely to ask this type of question, it may not be clear that the outer input is the input on the top in the graphical execution plan, and the inner input is the bottom input.

Third, the answer seems to assume that Nested Loops always joins data read directly from two tables. That may be true in classroom examples and in blog posts, but real execution plans for real queries in the actual world often use dozens of tables, and either or even both of the inputs of a Nested Loops could be an entire Christmas tree of operators calling operators.

Fourth, there is a small but important error in this sentence: “The Nested Loops operator is very efficient when one table has only a few rows and the other table is much larger, since it can use the smaller table as the outer loop and quickly filter down the results from the larger table”. For Nested Loops to be efficient, the inner (top) input has have only a few rows. Those do not need to come from a small table; a large table with a very selective filter, or a large table that is then aggregated by a column with only a few values, would also produce just a few rows. Also, if the other input is indeed a much larger table, then SQL Server can only filter down the results from that table ‘quickly’ if there is a supporting index. A very important requirement, that ChatGPT forgot to mention.

It does touch upon that requirement in the next sentence; “The Nested Loops operator can also be efficient when the join predicate is selective, meaning it eliminates many rows from the larger table, since it can quickly iterate through the smaller table and only access the larger table for the matching rows”. Yes, a selective filter helps. But, again, only when there is a supporting index. Without that index, you would get multiple executions of a scan operator on the large table, and that never performs well.

Okay, we finally get to the indexes in the next bullet point: “The Nested Loops operator can use indexes on the join columns to improve performance, especially if the indexes cover the necessary columns”. Well, technically Nested Loops cannot use indexes at all, but the operators on its two inputs can, so I’ll let that one slide. But what bugs me here is that ChatGPT presents index usage as an optional extra option. That is misleading. If the lower input of a Nested Loops is not very cheap (i.e. if it does not use indexes very effectively), then due to the repeated executions, the query will never be fast.

Then, the downsides. “The Nested Loops operator can cause a lot of I/O if the larger table is not in memory, since it may need to read each page of the table multiple times for each iteration”. Ouch, what a misleading statement! Yes, if the table(s) (or index(es)) used in the lower / outer input are not in memory, then there will be more I/O. But, except in extremely rare cases (and in those cases, it’s not the Nested Loops that’s the issue), pages will not be read multiple times. The first read will bring them in the buffer pool, where they will stay for at least the duration of the query.

And the worst part is yet to come: “The Nested Loops operator may not be able to use certain types of indexes, such as clustered indexes or non-covering indexes, which can limit its ability to optimize the join”. What a load of BS! A Nested Loops (or rather, the operators it calls on its inputs) definitely can use non-covering indexes – it usually chooses not to because that would also necessitate a lookup operator, and that would drive up the total cost, but there are cases where that is still the cheapest option for the execution plan, and then it will definitely be used. And using the clustered index on either input of a Nested Loops is in fact incredibly common, so I have no idea where ChatGPT got the idea that these cannot be used.

Table Spool

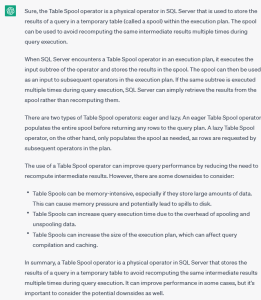

Okay, next test. Let’s focus on a lesser used operator, the Table Spool. And let’s focus the question on just its functionality:

Okay, next test. Let’s focus on a lesser used operator, the Table Spool. And let’s focus the question on just its functionality:

“Can you explain the function of a Table Spool operator?”

Again, see the picture to the right for the full answer.

Once more, the answer contains a dangerous mix of correct and incorrect elements. First, while it is correct that a Table Spool stores data in a temporary table, called a spool, what it stores is typically not the result of a query, but the result of a subtree of the execution plan. An intermediate result, that is needed more than once in the execution plan, and hence stored to avoid having to recompute it.

And yes, the second paragraph does indeed talk about subtrees, so now all of a sudden the answer is correct. But after the incorrect statement in the first paragraph, this gets confusing.

It gets worse when we come to the second half of the answer, with the bullet points. For instance, the first bullet point: “Table Spools can be memory-intensive, especially if they store large amounts of data. This can cause memory pressure and potentially lead to spills to disk”. Uhm, no. Not at all. As you yourself already said in the first paragraph, Table Spool stores its data in temporary tables. Not in memory. So there is no specific memory requirement (other than the standard buffer pool usage) for Table Spool. And exactly zero chance of spilling to disk. (Well, again, apart from the standard chance of a checkpoint happening while the process runs, which would write modified pages in the buffer pool to disk as it always does).

Then, it claims that “Table Spools can increase query execution time due to the overhead of spooling and unspooling data”. Well, yes, this is true. And I have seen cases where a Table Spool turned out to be more expensive than simply re-executing the same operators to produce the same data again. But those are exceptions. Usually, even though the Table Spool takes a lot of resources, that is still considerably less than re-executing a branch of the execution plan would cost.

The last bullet point is a statement that I don’t even understand: “Table Spools can increase the size of the execution plan, which can affect query compilation and caching”. Well, yeah, every operator adds one operator to the size of the execution plan. But in this case, the alternative would be to repeat a subtree of the execution plan. That’s going to be even more operators than this single one!

And finally, I was disappointed to not even see a single mention of the usage of Table Spool operators for Halloween protection.

Trick question

I decided to see if I could trick ChatGPT into coming up with a completely made-up answer if I asked about a completely made-up operator: “Next, how about the Transform Rows operator?”. (By the way, I love how ChatGPT remembers previous questions and hence can deduct from that context what this question means!)

Well done, ChatGPT! You didn’t fall for my trick, point for you!

The very rare Switch operator

But then, what about an operator that does exist, but is so rarely used in execution plans, and so utterly uninteresting even in those few plans where it does get used, that there is almost no information about it on the internet? I decided to ask about the Switch operator. (Sorry, no link to its description on the Execution Plan Reference – for exactly the reasons mentioned above, this operator is pretty low priority and I have not documented it yet. But if you must know, the Switch operator has two or more inputs, but executes only one of them; which one is executed is decided at run time based on a value computed elsewhere in the execution plan).

“What exactly does a Switch operator do in an execution plan?”

The answer I got to this question (not included in this blog to save some space) confused me at first. Until I realized that ChatGPT had decided to provide me an answer about the CASE expression in T-SQL. Yes, it even gave “an example of how the Switch operator can be used in a SQL query”, and then the example given was a valid query using a CASE expression!

So I decided to try to clarify my question a bit more:

So I decided to try to clarify my question a bit more:

“Sorry, I meant the Switch operator in a SQL Server execution plan, not in a query.”

And this time, I did get an answer. Oh boy, did I get an answer! It was so long that I needed two screenshots to capture it all. Once more, they are included here on the right, and you can click them to see them at full size.

When reading this answer, I do not even know where to begin debunking the utter nonsense that  ChatGPT has decided to serve up here. First, it claims that Switch routes rows from multiple input sources to multiple output sources. (Uhm … output source? Isn’t that called a destination anyway?). That’s simply not how execution plans work in SQL Server. An operator can have multiple inputs (child operators), but never multiple output (parents). Simply not supported by the architecture of execution plans.

ChatGPT has decided to serve up here. First, it claims that Switch routes rows from multiple input sources to multiple output sources. (Uhm … output source? Isn’t that called a destination anyway?). That’s simply not how execution plans work in SQL Server. An operator can have multiple inputs (child operators), but never multiple output (parents). Simply not supported by the architecture of execution plans.

It then names UNION ALL as an example where a Switch might be used. Again, no. A statement that uses UNION ALL will almost always use the Concatenation operator, and only in a few very rare cases it might use a Merge Join (with its Logical operation set to Concatenation) instead.

The image that is included does not even look like a SQL Server execution plan. At least not in any of the graphical tools that I have ever seen. It also once more pretends that a single operator can deliver rows to two destinations, which as I already said is completely untrue.

The explanation below the picture is even more confusing. The Sort operator sends rows to Switch, that is clear. But then, Switch apparently has two input streams, A and B. And then rows are routed to output stream A or B. This is not just confusing, it is complete nonsense.

The proof of the pudding …

… is in the eating. And the proof of ChatGPT’s understanding of query tuning is in giving it a practical exercise. So here is the final question I asked ChatGPT:

“How would you optimize this specific query:

SELECT s.SalesOrderID,

s.OrderDate,

s.CustomerID,

s.ProductID

FROM (SELECT sh.*,

sd.ProductId,

ROW_NUMBER() OVER (PARTITION BY sd.ModifiedDate

ORDER BY sd.ProductId) AS rn

FROM (SELECT TOP (1000) *

FROM Sales.SalesOrderDetail

ORDER BY SalesOrderDetailId DESC) AS sd

INNER JOIN Sales.SalesOrderHeader AS sh

ON sh.SalesOrderId = sd.SalesOrderId) AS s

WHERE s.rn = 1;

For the record, this is a query that I found in an old blog post from Adam Machanic, and then changed a bit; I often use it to showcase the many different aspects of Parallelism operators. I have never bothered trying to optimize it myself, but I figured that this query would be complex enough to give ChatGPT a real challenge, yet simple enough that it’s easy to verify the answer.

For the record, this is a query that I found in an old blog post from Adam Machanic, and then changed a bit; I often use it to showcase the many different aspects of Parallelism operators. I have never bothered trying to optimize it myself, but I figured that this query would be complex enough to give ChatGPT a real challenge, yet simple enough that it’s easy to verify the answer.

Well, let’s look at the answer that I was given. Once more cut into two screenshots, both included on the right.

Well, let’s look at the answer that I was given. Once more cut into two screenshots, both included on the right.

The generic description of techniques one can use for optimizing this query (or, rather, any query) include some good suggestions, but also some very weird ideas. Adding an index on a column that appears in a WHERE clause? First time I heard that idea (and hopefully the last time as well!). Also, there is no need to shorten the column list returned from the innermost subquery, as suggested by ChatGPT. This is a simplification that the query optimizer itself can handle. (It does of course remain important to limit the outermost SELECT to only the columns that are really needed).

But before diving too deep into the theoretic part, let’s actually eat some pudding!

I first executed the original query. It returned 14 rows, using 187 ms CPU time in an elapsed time of 14 ms, and using a total of 2,033 logical reads.

Then I executed the “optimized version” that ChatGPT suggested. It returned 96 rows, and that alone disqualifies it. After all, an optimized version is supposed to return the exact same results, not almost 7 times as many results. Besides, the 96 rows returned by the “optimized” query included only exactly one row from the original results, the other 13 were not even included. So even if it did indeed run faster, it would not be a valid optimization, since it returned incorrect results.

But the worst of it all is, it did not even execute any faster! Granted, the CPU time was down, from 187 ms to 63 ms. But the elapsed time was up, from 14 ms to 52 ms. And the total logical reads was up as well, from 2,033 to 2,795.

I ended the session by asking ChatGPT what logic it followed to arrive at the answer it gave me:

“Can you explain the thought process that made you come to this answer?”

“Can you explain the thought process that made you come to this answer?”

I will not dissect all the elements in this answer. By now, I believe that I have made my point already and there is no need to keep beating ChatGPT now that it is already down. But there is one part of the answer that really made me laugh:

“The next step is to review the execution plan for the query and identify any potential issues. In this case, the execution plan shows a clustered index scan on the SalesOrderDetail table, which suggests that the query is not using an appropriate index to retrieve the data.”

The first step is correct, as a general advise. But the rest is nonsense. ChatGPT claims that it has seen the execution plan. How? Where? When? I have presented it with a query, not with an execution plan. (I did consider giving it an execution plan, but I decided to first try giving it only a query. And I gave up after I saw what it did for that query.)

Conclusion

I have tried to verify whether ChatGPT can be a useful tool to assist when you try to optimize your query performance. One way to use such a tool would be to ask it questions to help you understand execution plans that include elements you have not seen before.

I have found that, when asking about execution plan operators for which a lot of information is available on the internet, ChatGPT will produce an answer that includes information from those many sources, but garbled together in a way that makes it look reliable, while in reality the information may be misleading or confusing.

Conversely, when asking about very rare operators for which little to no information is available on the internet, ChatGPT will happily make up a total garbage answer. (That at least has the benefit of being so completely wrong that most people will realize not to take it seriously).

Finally, I also asked it to help me optimize a query. It responded by presenting me with an optimized version of that same query. That looks incredibly helpful – until you realize that the optimized version of the query actually took more resources, and then also produced incorrect results!

In other words, I do not believe that ChatGPT is at this time ready to take over my job. It may be great at lots of things, but it totally sucks at helping people understand their execution plans or optimize their queries!